- [1] R. Chudley, R. Greeno, and K. Kovac. Chudley and Greeno’s Building Construction Handbook. Routledge, 2024. DOI: https://doi.org/10.1201/9781003392996.

- [2] Q. Gao, V. Shi, C. Pettit, and H. Han, (2022) “Property valuation using machine learning algorithms on statistical areas in Greater Sydney, Australia” Land Use Policy 123: 106409. DOI: https://doi.org/10.1016/j.landusepol.2022.106409.

- [3] A. Hammad, S. AbouRizk, and Y. Mohamed, (2014) “Application of KDD techniques to extract useful knowledge from labor resources data in industrial construction projects” Journal of Management in Engineering 30: 05014011. DOI: https://doi.org/10.1061/(ASCE)ME.1943-5479.0000280.

- [4] J. Liu, X. Zhao, and P. Yan, (2016) “Risk paths in international construction projects: Case study from Chinese contractors” Journal of Construction Engineering and Management 142: 05016002. DOI: https://doi.org/10.1061/(ASCE)CO.1943-7862.0001116.

- [5] P. N. Prasetyono, H. S. M. Suryanto, and H. Dani. “Predicting construction cost using regression techniques for residential building”. In: Journal of Physics: Conference Series. 1899. IOP Publishing, 2021, 012120. DOI: https://doi.org/10.1088/1742-6596/1899/1/012120.

- [6] L. O. Ugur, R. Kanit, H. Erdal, E. Namli, H. I. Erdal, U. N. Baykan, and M. Erdal, (2019) “Enhanced predictive models for construction costs: A case study of Turkish mass housing sector” Computational Economics 53: 1403–1419. DOI: https://doi.org/10.1007/s10614-018-9814-9.

- [7] A. Ashraf, M. Rady, and S. Y. Mahfouz, (2024) “Price prediction of residential buildings using random forest and artificial neural network” HBRC Journal 20: 23–41. DOI: https://doi.org/10.1080/16874048.2023.2296036.

- [8] A. Gurmu and M. P. Miri, (2025) “Machine learning regression for estimating the cost range of building projects” Construction Innovation 25: 577–593. DOI: https://doi.org/10.1108/CI-08-2022-0197.

- [9] S. T. Hashemi, O. M. Ebadati, and H. Kaur, (2020) “Cost estimation and prediction in construction projects: A systematic review on machine learning techniques” SN Applied Sciences 2: 1703. DOI: https://doi.org/10.1007/s42452-020-03497-1.

- [10] H. H. Elmousalami, (2019) “Comparison of Artificial Intelligence Techniques for Project Conceptual Cost Prediction” arXiv preprint arXiv:1909.11637. DOI: https://doi.org/10.48550/arXiv.1909.11637.

- [11] C. Zhan, Y. Liu, Z. Wu, M. Zhao, and T. W. S. Chow, (2023) “A hybrid machine learning framework for forecasting house price” Expert Systems with Applications 233: 120981. DOI: https://doi.org/10.1016/j.eswa.2023.120981.

- [12] H. Zhang, Y. Li, and P. Branco, (2024) “Describe the house and I will tell you the price: House price prediction with textual description data” Natural Language Engineering 30: 661–695. DOI: https://doi.org/10.1017/S1351324923000360.

- [13] M. Günnewig-Mönert and R. C. Lyons, (2024) “Housing prices, costs, and policy: The housing supply equation in Ireland since 1970” Real Estate Economics 52: 1075–1102. DOI: https://doi.org/10.1111/1540-6229.12491.

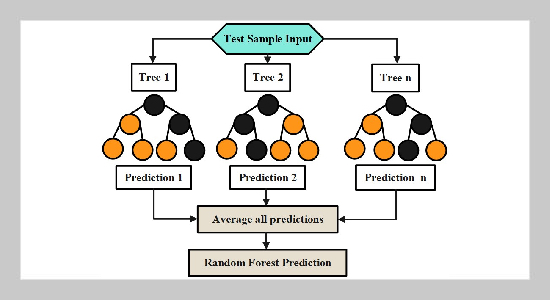

- [14] G. Biau, (2012) “Analysis of a random forests model” The Journal of Machine Learning Research 13: 1063–1095.

- [15] Z. E. Mrabet, N. Sugunaraj, P. Ranganathan, and S. Abhyankar, (2022) “Random forest regressor-based approach for detecting fault location and duration in power systems” Sensors 22: 458. DOI: http://dx.doi.org/10.3390/s22020458.

- [16] P. Nie, M. Roccotelli, M. P. Fanti, Z. Ming, and Z. Li, (2021) “Prediction of home energy consumption based on gradient boosting regression tree” Energy Reports 7: 1246–1255. DOI: https://doi.org/10.1016/j.egyr.2021.02.006.

- [17] U. Singh, M. Rizwan, M. Alaraj, and I. Alsaidan, (2021) “A machine learning-based gradient boosting regression approach for wind power production forecasting: A step towards smart grid environments” Energies 14: 5196.

- [18] T. Toharudin, R. E. Caraka, I. R. Pratiwi, Y. Kim, P. U. Gio, A. D. Sakti, M. Noh, F. A. L. Nugraha, R. S. Pontoh, and T. H. Putri, (2023) “Boosting algorithm to handle unbalanced classification of PM2.5 concentration levels by observing meteorological parameters in Jakarta-Indonesia using AdaBoost, XGBoost, CatBoost, and LightGBM” IEEE Access 11: 35680–35696. DOI: https://doi.org/10.1109/ACCESS.2023.3265019.

- [19] J. T. Hancock and T. M. Khoshgoftaar, (2020) “CatBoost for big data: an interdisciplinary review” Journal of Big Data 7: 94. DOI: https://doi.org/10.1186/s40537-020-00369-8.

- [20] J. Liang, Y. Bu, K. Tan, J. Pan, Z. Yi, X. Kong, and Z. Fan, (2022) “Estimation of stellar atmospheric parameters with light gradient boosting machine algorithm and principal component analysis” The Astronomical Journal 163: 153. DOI: https://doi.org/10.3847/1538-3881/ac4d97.

- [21] S. Deng, J. Su, Y. Zhu, Y. Yu, and C. Xiao, (2024) “Forecasting carbon price trends based on an interpretable light gradient boosting machine and Bayesian optimization” Expert Systems with Applications 242: 122502. DOI: https://doi.org/10.1016/j.eswa.2023.122502.

- [22] A. S. Al-Jawarneh, M. T. Ismail, and A. M. Awajan, (2021) “Elastic net regression and empirical mode decomposition for enhancing the accuracy of the model selection” International Journal of Mathematical, Engineering and Management Sciences 6: 564. DOI: https://doi.org/10.33889/IJMEMS.2021.6.2.034.

- [23] S. I. Altelbany, (2021) “Evaluation of Ridge, Elastic Net and Lasso Regression Methods in Precedence of Multicollinearity Problem: A Simulation Study” Journal of Applied Economics & Business Studies (JAEBS) 5. DOI: https://doi.org/10.34260/jaebs.517.

- [24] P. J. García-Nieto, E. García-Gonzalo, and J. P. Paredes-Sánchez, (2021) “Prediction of the critical temperature of a superconductor by using the WOA/MARS, Ridge, Lasso and Elastic-net machine learning techniques” Neural Computing and Applications 33: 17131–17145. DOI: https://doi.org/10.1007/s00521-021-06304-z.

- [25] M. Amjad, I. Ahmad, M. Ahmad, P. Wróblewski, P. Kamiński, and U. Amjad, (2022) “Prediction of pile bearing capacity using XGBoost algorithm: modeling and performance evaluation” Applied Sciences 12: 2126. DOI: https://doi.org/10.3390/app12042126.

- [26] A. I. A. Osman, A. N. Ahmed, M. F. Chow, Y. F. Huang, and A. El-Shafie, (2021) “Extreme gradient boosting (Xgboost) model to predict the groundwater levels in Selangor Malaysia” Ain Shams Engineering Journal 12: 1545–1556. DOI: https://doi.org/10.1016/j.asej.2020.11.011.

- [27] W.-T. Pan, (2012) “A new fruit fly optimization algorithm: taking the financial distress model as an example” Knowledge-Based Systems 26: 69–74. DOI: https://doi.org/10.1016/j.knosys.2011.07.001.

- [28] R. Sonia, J. Joseph, D. Kalaiyarasi, N. Kalyani, A. S. G. Gupta, G. Ramkumar, H. S. Almoallim, S. A. Alharbi, and S. S. Raghavan, (2024) “Segmenting and classifying skin lesions using a fruit fly optimization algorithm with a machine learning framework” Automatika: časopis za automatiku, mjerenje, elektroniku, računarstvo i komunikacije 65: 217–231. DOI: https://doi.org/10.1080/00051144.2023.2293515.

- [29] D. Chicco, M. J. Warrens, and G. Jurman, (2021) “The coefficient of determination R-squared is more informative than SMAPE, MAE, MAPE, MSE and RMSE in regression analysis evaluation” PeerJ Computer Science 7: e623. DOI: https://doi.org/10.7717/peerj-cs.623.

- [30] P. Dumre, S. Bhattarai, and H. K. Shashikala. “Optimizing linear regression models: A comparative study of error metrics”. In: 2024 4th International Conference on Technological Advancements in Computational Sciences (ICTACS). IEEE, 2024, 1856–1861. DOI: https://doi.org/10.1109/ICTACS62700.2024.10840719.

- [31] T. O. Hodson, (2022) “Root mean square error (RMSE) or mean absolute error (MAE): When to use them or not” Geoscientific Model Development Discussions 2022: 1–10. DOI: https://doi.org/10.5194/gmd-15-5481-2022.

- [32] J. Terven, D. M. Cordova-Esparza, A. Ramirez-Pedraza, E. A. Chavez-Urbiola, and J. A. Romero-Gonzalez, (2023) “Loss functions and metrics in deep learning” arXiv preprint arXiv:2307.02694. DOI: https://doi.org/10.48550/arXiv.2307.02694.