- [1] R. Hamdani and I. Chihi, (2025) “Adaptive human computer interaction for industry 5.0: A novel concept, with comprehensive review and empirical validation" Computers in Industry 168: 104268. DOI: 10.1016/j.compind.2025.104268.

- [2] Z. Lyu, (2024) “State-of-the-art human-computer interaction in metaverse" International Journal of Human–Computer Interaction 40(21): 6690–6708. DOI: 10.1080/10447318.2023.2248833.

- [3] J. G. C. Ramírez, (2024) “Natural language processing advancements: Breaking barriers in human-computer interaction" Journal of Artificial Intelligence General Science (JAIGS) ISSN: 3006-4023 3(1): 31–39. DOI: 10.60087/jaigs.v3i1.63.

- [4] J. Yu, Z. Lu, S. Yin, and M. Ivanović, (2024) “News recommendation model based on encoder graph neural network and bat optimization in online social multimedia art education" Computer Science and Information Systems 21(3): 989–1012. DOI: 10.2298/CSIS231225025Y.

- [5] K. Chen and W. Tao, (2017) “Once for all: a two-flow convolutional neural network for visual tracking" IEEE Transactions on Circuits and Systems for Video Technology 28(12): 3377–3386. DOI: 10.1109/TCSVT.2017.2757061.

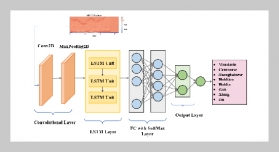

- [6] N. Liu and J. Han, (2018) “A deep spatial contextual long-term recurrent convolutional network for saliency detection" IEEE Transactions on Image Processing 27(7): 3264–3274. DOI: 10.1109/TIP.2018.2817047.

- [7] B. Zhang, L. Zhao, and X. Zhang, (2020) “Three dimensional convolutional neural network model for tree species classification using airborne hyperspectral images" Remote Sensing of Environment 247: 111938. DOI: 10.1016/j.rse.2020.111938.

- [8] H. Wang, Y. Liu, X. Zhen, and X. Tu, (2021) “Depression speech recognition with a three-dimensional convolutional network" Frontiers in Human Neuroscience 15: 713823. DOI: 10.3389/fnhum.2021.713823.

- [9] T. Huynh-The, T.-V. Nguyen, Q.-V. Pham, D. B. da Costa, and D.-S. Kim, (2022) “MIMO-OFDM modulation classification using three-dimensional convolutional network" IEEE Transactions on Vehicular Technology 71(6): 6738–6743. DOI: 10.1109/TVT.2022.3159254.

- [10] K. Zhang, Y. Guo, X. Wang, J. Yuan, and Q. Ding, (2019) “Multiple feature reweight densenet for image classification" IEEE Access 7: 9872–9880. DOI: 10.1109/ACCESS.2018.2890127.

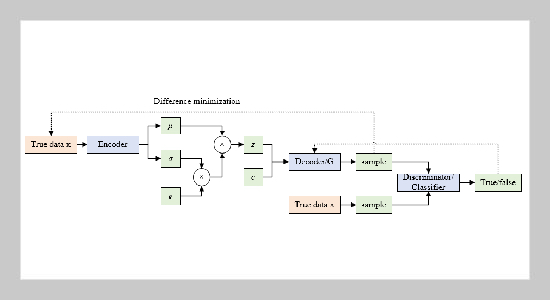

- [11] S. Yin, H. Li, A. A. Laghari, T. R. Gadekallu, G. A. Sampedro, and A. Almadhor, (2024) “An Anomaly Detection Model Based on Deep Auto-Encoder and Capsule Graph Convolution via Sparrow Search Algorithm in 6G Internet of Everything" IEEE Internet of Things Journal 11(18): 29402–29411. DOI: 10.1109/JIOT.2024.3353337.

- [12] Y. Pang and Y. Liu. “Conditional generative adversarial networks (CGAN) for aircraft trajectory prediction considering weather effects”. In: AIAA Scitech 2020 Forum. 2020, 1853. DOI: 10.2514/6.2020-1853.

- [13] T. Cemgil, S. Ghaisas, K. Dvijotham, S. Gowal, and P. Kohli, (2020) “The autoencoding variational autoencoder" Advances in Neural Information Processing Systems 33: 15077–15087. DOI: 10.1117/12.2512660.6013931859001.

- [14] J. Yu, H. Li, S.-L. Yin, and S. Karim, (2020) “Dynamic gesture recognition based on deep learning in human-to-computer interfaces" Journal of Applied Science and Engineering 23(1): 31–38. DOI: 10.6180/jase.202003_23(1).0004.

- [15] M. Segu, A. Tonioni, and F. Tombari, (2023) “Batch normalization embeddings for deep domain generalization" Pattern Recognition 135: 109115. DOI: 10.1016/j.patcog.2022.109115.

- [16] S. R. Bose and V. S. Kumar, (2020) “Efficient inception V2 based deep convolutional neural network for real-time hand action recognition" IET Image Processing 14(4): 688–696. DOI: 10.1049/iet-ipr.2019.0985.

- [17] G. Papakostas, Y. Boutalis, D. Karras, and B. Mertzios, (2010) “Efficient computation of Zernike and Pseudo Zernike moments for pattern classification applications" Pattern Recognition and Image Analysis 20: 56–64. DOI: 10.1134/S1054661810010050.

- [18] J. Wan, C. Lin, L. Wen, Y. Li, Q. Miao, S. Escalera, G. Anbarjafari, I. Guyon, G. Guo, and S. Z. Li, (2020) “ChaLearn looking at people: IsoGD and ConGD large scale RGB-D gesture recognition" IEEE Transactions on Cybernetics 52(5): 3422–3433. DOI: 10.1109/TCYB.2020.3012092.

- [19] Y. Tie, X. Zhang, J. Chen, L. Qi, and J. Tie, (2023) “Dynamic Gesture Recognition Based on Deep 3D Natural Networks" Cognitive Computation 15(6): 2087–2100. DOI: 10.1007/s12559-023-10177-w.

- [20] A. Cuadra, M. Wang, L. A. Stein, M. F. Jung, N. Dell, D. Estrin, and J. A. Landay. “The illusion of empathy? Notes on displays of emotion in human-computer interaction”. In: Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems. 2024, 1–18. DOI: 10.1145/3613904.3642336.

- [21] N. Al Mudawi, H. Ansar, A. Alazeb, H. Aljuaid, Y. AlQahtani, A. Algarni, A. Jalal, and H. Liu, (2024) “Innovative healthcare solutions: robust hand gesture recognition of daily life routines using 1D CNN" Frontiers in Bioengineering and Biotechnology 12: 1401803. DOI: 10.3389/fbioe.2024.1401803.

- [22] Y. Xiong, Z. Huo, J. Zhang, Y. Liu, D. Yue, N. Xu, R. Gu, L. Wei, L. Luo, M. Chen, et al., (2024) “Triboelectric in-sensor deep learning for self-powered gesture recognition toward multifunctional rescue tasks" Nano Energy 124: 109465. DOI: 10.1016/j.nanoen.2024.109465.

- [23] R. Rastgoo, K. Kiani, S. Escalera, and M. Sabokrou, (2024) “Multi-modal zero-shot dynamic hand gesture recognition" Expert Systems with Applications 247: 123349. DOI: 10.1016/j.eswa.2024.123349.

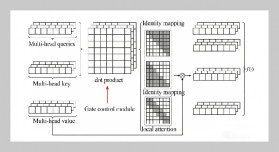

- [24] A. S. M. Miah, M. A. M. Hasan, Y. Tomioka, and J. Shin, (2024) “Hand gesture recognition for multi-culture sign language using graph and general deep learning network" IEEE Open Journal of the Computer Society 5: 144–155. DOI: 10.1109/OJCS.2024.3370971.

- [25] L. I. B. López, F. M. Ferri, J. Zea, Á. L. V. Caraguay, and M. E. Benalcázar, (2024) “CNN-LSTM and post-processing for EMG-based hand gesture recognition" Intelligent Systems with Applications 22: 200352. DOI: 10.1016/j.iswa.2024.200352.

- [26] Z. Chen, W. Huang, H. Liu, Z. Wang, Y. Wen, and S. Wang, (2024) “ST-TGR: Spatio-temporal representation learning for skeleton-based teaching gesture recognition" Sensors 24(8): 2589. DOI: 10.3390/s24082589.